Projects

VIVE3D: Viewpoint-Independent Video Editing using 3D-Aware GANs

Paper presented at CVPR 2023

We extend the capabilities of image-based 3D GANs to video editing by introducing a novel GAN inversion technique specifically tailored to 3D GANs. Besides traditional semantic face edits (e.g., for age and expression), we are the first to demonstrate edits that show novel views of the head. These edits are enabled by the inherent properties of 3D GANs and by our optical flow-guided compositing technique, which combines the edited head with the background video.

Webpage

Paper

Video

Code

@inproceedings{Fruehstueck2023VIVE3D,

title = {{VIVE3D}: Viewpoint-Independent Video Editing using {3D}-Aware {GANs}},

author = {Fr{\"u}hst{\"u}ck, Anna and Sarafianos, Nikolaos and Xu, Yuanlu and Wonka, Peter and Tung, Tony},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2023}

}

InsetGAN for Full-Body Image Generation

Paper presented at CVPR 2022

We demonstrate the first viable framework to generate high-quality synthesized full-body human images at state-of-the-art resolution. The full-body human domain is very challenging due to the large variance in pose, clothing and identity. To capture the rich details of the domain, we define a canvas network that generates a human body and one or more specialized Inset generators that enhance specific image regions.
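For a canvas and an inset to combine seamlessly, the inset's output has to agree with the canvas near the edges of the region it replaces. The sketch below is a hypothetical illustration of one way to measure that agreement: a mean absolute difference computed only on a border band of the inset region. It is not the paper's loss or optimization; the function name `border_l1`, the grayscale list-of-rows images, and the `band` parameter are all assumptions made for this example.

```python
from typing import List

Image = List[List[float]]  # hypothetical: grayscale image as a list of rows


def border_l1(canvas: Image, inset: Image, top: int, left: int, band: int) -> float:
    """Mean absolute difference between `inset` and the canvas crop it would
    replace (placed at row `top`, column `left`), evaluated only on a border
    band `band` pixels wide. The interior is ignored, so the inset may add
    detail there without being penalized."""
    h, w = len(inset), len(inset[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            on_border = y < band or y >= h - band or x < band or x >= w - band
            if on_border:
                total += abs(canvas[top + y][left + x] - inset[y][x])
                count += 1
    return total / count if count else 0.0
```

In an optimization setting, a term like this would be minimized with respect to the generators' latent codes so that the inset blends into the canvas; here it is shown only as a plain function.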

Webpage

Paper

Poster

Video

Code

@inproceedings{Fruehstueck2022InsetGAN,

title = {{InsetGAN} for Full-Body Image Generation},

author = {Fr{\"u}hst{\"u}ck, Anna and Singh, {Krishna Kumar} and Shechtman, Eli and Mitra, {Niloy J.} and Wonka, Peter and Lu, Jingwan},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2022}

}

TileGAN: Synthesis of Large-Scale Non-Homogeneous Textures

Technical paper presented at SIGGRAPH 2019

We generate large-scale textures by building on recent advances in Generative Adversarial Networks. Our technique combines the outputs of GANs trained at a smaller resolution to produce arbitrarily large, plausible texture maps with virtually no boundary artifacts. We also developed an interface for artistic control that allows users to create textures from guidance images and to modify and paint on the GAN textures interactively.
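Why tiles assembled from smaller outputs can avoid visible seams is easiest to see with a much simpler, swapped-in technique: linear feathering of overlapping image tiles. This is not TileGAN's method; it is a plain image-space illustration, and the function name `blend_horizontal` and the list-of-rows tile format are assumptions for this sketch.

```python
from typing import List

Tile = List[List[float]]  # hypothetical: grayscale tile as a list of rows


def blend_horizontal(left: Tile, right: Tile, overlap: int) -> Tile:
    """Join two tiles side by side, where the last `overlap` columns of `left`
    and the first `overlap` columns of `right` cover the same region.
    Inside the overlap, the weight of `right` ramps linearly from near 0 to
    near 1, so there is no hard seam between the two tiles."""
    if len(left) != len(right):
        raise ValueError("tiles must have the same height")
    if not 1 <= overlap <= min(len(left[0]), len(right[0])):
        raise ValueError("overlap must be between 1 and the tile width")
    out = []
    for row_l, row_r in zip(left, right):
        w_l = len(row_l)
        row = list(row_l[: w_l - overlap])  # columns covered only by `left`
        for i in range(overlap):
            t = (i + 1) / (overlap + 1)  # blend weight of the right tile
            row.append((1 - t) * row_l[w_l - overlap + i] + t * row_r[i])
        row.extend(row_r[overlap:])  # columns covered only by `right`
        out.append(row)
    return out


print(blend_horizontal([[0, 0, 0, 0]], [[1, 1, 1, 1]], 2))
```

Joining a tile of zeros and a tile of ones with an overlap of 2 yields a six-column row that rises through roughly 1/3 and 2/3 instead of jumping from 0 to 1. Image-space feathering like this tends to blur content in the overlap, which is one reason a GAN-based approach can produce sharper transitions.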

Webpage

Paper

Poster

Video

Code

@article{Fruehstueck2019TileGAN,

title = {{TileGAN}: Synthesis of Large-Scale Non-Homogeneous Textures},

author = {Fr\"{u}hst\"{u}ck, Anna and Alhashim, Ibraheem and Wonka, Peter},

journal = {ACM Transactions on Graphics (Proc. SIGGRAPH)},

issue_date = {July 2019},

volume = {38},

number = {4},

year = {2019}

}

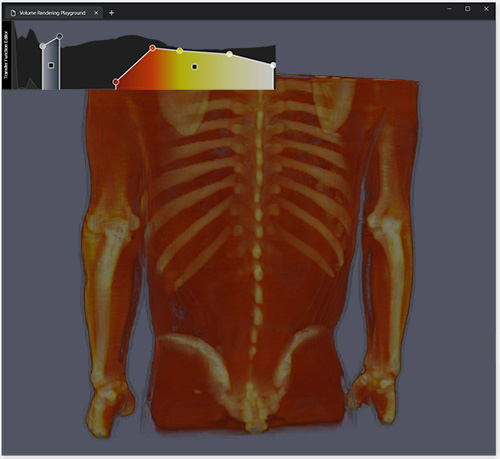

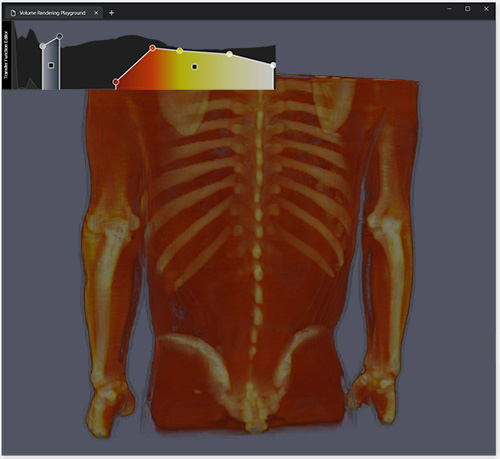

GPU-accelerated browser-based visualization

Traineeship at the Surgical Planning Lab, Brigham and Women's Hospital (Harvard Medical School)

During my traineeship at Harvard Medical School, I worked on GPU-accelerated browser-based visualization software. I designed and developed a prototype of a browser-based volume rendering solution and user interface components for web-based imaging, and I developed algorithms for browser-based GPU processing using grid-based PDE solvers for 2D and 3D image segmentation. Some of this code was integrated into the open-source medical imaging and visualization software 3D Slicer.

Volume Rendering Demo

2D SDF Demo

Documentation for Transfer Function Panel
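Grid-based PDE solvers map well to the GPU because every grid cell is updated independently from its neighbors' previous values, much like a fragment shader reading one texture and writing another. As a minimal sketch of that pattern (not the traineeship code, and not a segmentation method on its own), here is one explicit time step of the 2D heat equation on a pixel grid. The function name `diffusion_step`, the clamp-to-edge boundary handling, and the default `dt` are assumptions for this example.

```python
from typing import List

Grid = List[List[float]]


def diffusion_step(u: Grid, dt: float = 0.2) -> Grid:
    """One explicit Euler step of du/dt = laplacian(u) on a unit-spaced grid.
    Each output cell depends only on the previous grid, so all cells could be
    computed in parallel (e.g. one GPU thread or fragment per cell)."""
    if not 0 < dt <= 0.25:
        raise ValueError("explicit 2D scheme is stable only for 0 < dt <= 0.25")
    h, w = len(u), len(u[0])

    def at(y: int, x: int) -> float:
        # Clamp-to-edge sampling: no flux across the grid boundary.
        return u[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [
        [
            u[y][x] + dt * (at(y - 1, x) + at(y + 1, x) + at(y, x - 1) + at(y, x + 1) - 4 * u[y][x])
            for x in range(w)
        ]
        for y in range(h)
    ]
```

Applied to a single bright pixel, one step spreads part of its value to the four direct neighbors while keeping the total unchanged. PDE-based segmentation methods, such as level-set evolution, iterate similar local stencil updates with more elaborate speed terms.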

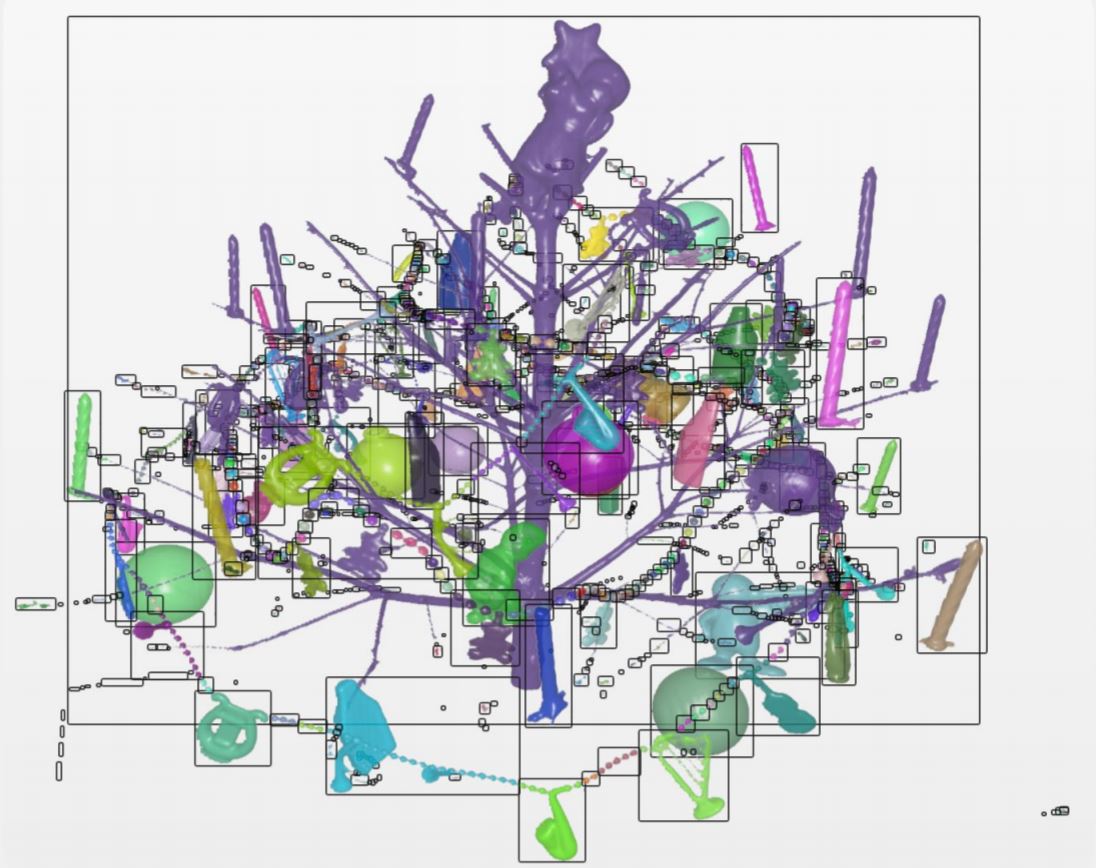

Volume Object Model for Remote Visualization

Master's thesis project at TU Vienna in collaboration with KAUST

In visualization applications with many objects, rendering the scene can be prohibitively expensive, which compromises an interactive user experience. Our deferred visualization pipeline divides the visualization computation between a server and a thin client. The scene is preprocessed on the server and transferred to the client as an intermediate representation consisting of metadata and pre-rendered visualizations of the scene's objects, which enables client-side interactivity even on large datasets.
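To make the idea of decoupling object manipulation from rendering concrete, the following hypothetical sketch shows a thin client that composites pre-rendered per-object layers back to front with the premultiplied-alpha "over" operator. Hiding an object or changing its order then requires only re-compositing, not re-rendering on the server. The `ObjectLayer` structure, the single representative depth per object, and the flat pixel lists are simplifying assumptions, not the representation used in the thesis.

```python
from dataclasses import dataclass
from typing import List, Tuple

RGBA = Tuple[float, float, float, float]  # premultiplied color + alpha
RGB = Tuple[float, float, float]


@dataclass
class ObjectLayer:
    name: str
    depth: float      # hypothetical: one representative depth per object
    rgba: List[RGBA]  # pre-rendered pixels received from the server
    visible: bool = True  # client-side toggle, no server round trip


def composite(layers: List[ObjectLayer], background: RGB = (0.0, 0.0, 0.0)) -> List[RGB]:
    """Blend visible layers from farthest to nearest with the 'over' operator."""
    n = len(layers[0].rgba) if layers else 0
    out = [tuple(background) for _ in range(n)]
    for layer in sorted(layers, key=lambda l: l.depth, reverse=True):
        if not layer.visible:
            continue
        for i, (r, g, b, a) in enumerate(layer.rgba):
            br, bg, bb = out[i]
            # Premultiplied 'over': src + (1 - src_alpha) * dst
            out[i] = (r + (1 - a) * br, g + (1 - a) * bg, b + (1 - a) * bb)
    return out
```

A per-object depth only works when objects do not interpenetrate; a real system would likely need per-pixel depth or other metadata to resolve overlaps correctly.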

Thesis

Poster

@mastersthesis{Fruehstueck2015DOM,

title = {Decoupling Object Manipulation from Rendering in a Thin Client Visualization System},

author = {Fr\"{u}hst\"{u}ck, Anna},

year = {2015},

month = Sep,

school = {Institute of Computer Graphics and Algorithms, Vienna University of Technology}

}