VIVE3D: Viewpoint-Independent Video Editing using 3D-Aware GANs

Anna Frühstück¹ ², Nikolaos Sarafianos², Yuanlu Xu², Peter Wonka¹ and Tony Tung²

¹ KAUST, ² Meta Reality Labs Research, Sausalito

CVPR, 2023

Abstract

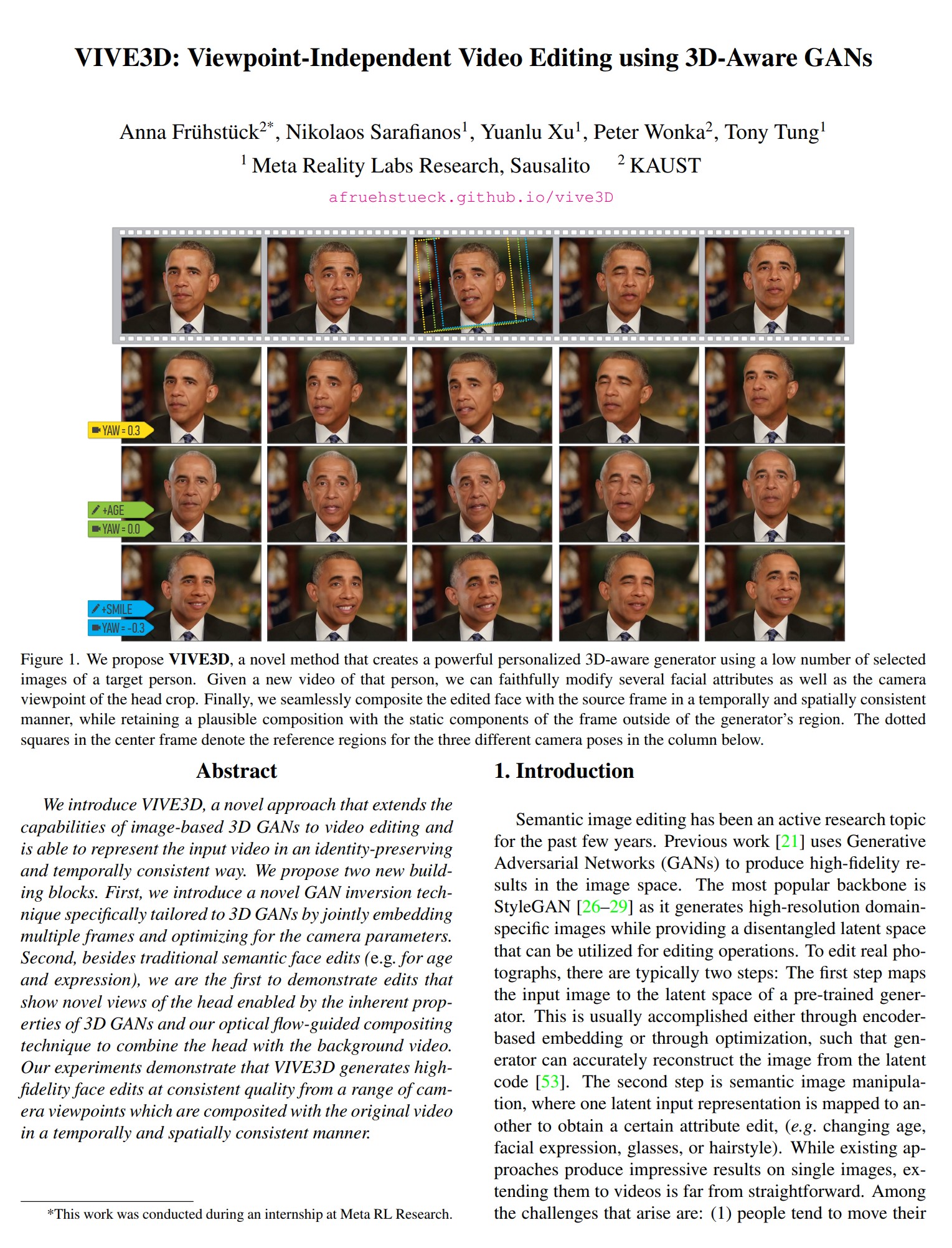

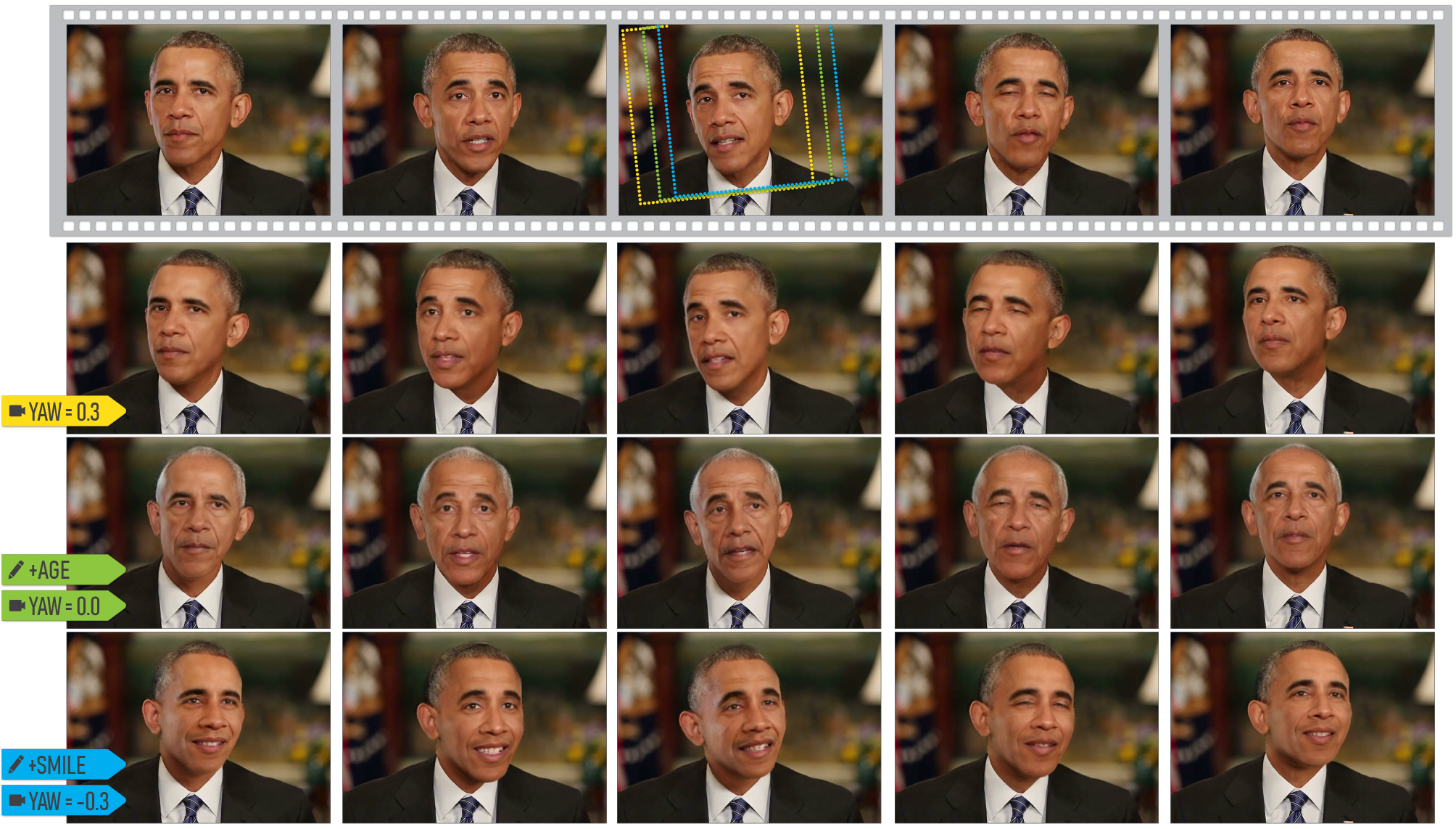

We introduce VIVE3D, a novel approach that extends the capabilities of image-based 3D GANs to video editing and is able to represent the input video in an identity-preserving and temporally consistent way. We propose two new building blocks. First, we introduce a novel GAN inversion technique specifically tailored to 3D GANs by jointly embedding multiple frames and optimizing for the camera parameters. Second, besides traditional semantic face edits (e.g., for age and expression), we are the first to demonstrate edits that show novel views of the head, enabled by the inherent properties of 3D GANs and by our optical flow-guided compositing technique, which combines the head with the background video. Our experiments demonstrate that VIVE3D generates high-fidelity face edits at consistent quality from a range of camera viewpoints, which are composited with the original video in a temporally and spatially consistent manner.
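The idea of jointly embedding multiple frames while optimizing camera parameters can be illustrated with a toy sketch. This is not the VIVE3D implementation: the "generator" below is a hypothetical stand-in that merely rotates a 2-D code by a yaw angle, and all names (`generate`, `joint_loss`, `invert_jointly`) are assumptions made for illustration. The sketch only shows the structure of the optimization, in which one latent code is shared across frames and each frame gets its own camera parameter.

```python
import math

def generate(w, yaw):
    """Toy stand-in for a 3D-aware generator (assumption, not a real GAN):
    rotates a 2-D 'identity' code w by a per-frame camera yaw."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * w[0] - s * w[1], s * w[0] + c * w[1])

def joint_loss(params, frames):
    """Sum of squared errors over all frames.
    params = [w0, w1, yaw_0, ..., yaw_{n-1}]: one shared code, one yaw per frame."""
    w, yaws = params[:2], params[2:]
    total = 0.0
    for yaw, target in zip(yaws, frames):
        out = generate(w, yaw)
        total += (out[0] - target[0]) ** 2 + (out[1] - target[1]) ** 2
    return total

def numeric_grad(f, params, eps=1e-6):
    """Central finite-difference gradient, used here to keep the sketch short."""
    grad = []
    for i in range(len(params)):
        hi = params[:i] + [params[i] + eps] + params[i + 1:]
        lo = params[:i] + [params[i] - eps] + params[i + 1:]
        grad.append((f(hi) - f(lo)) / (2 * eps))
    return grad

def invert_jointly(frames, steps=500, lr=0.05):
    """Gradient descent over the shared code and all per-frame yaws at once."""
    params = [1.0, 0.0] + [0.0] * len(frames)
    history = [joint_loss(params, frames)]
    for _ in range(steps):
        grad = numeric_grad(lambda p: joint_loss(p, frames), params)
        params = [p - lr * g for p, g in zip(params, grad)]
        history.append(joint_loss(params, frames))
    return params, history

# Synthetic "video": the same identity code seen from three yaw angles.
true_w = (1.0, 0.5)
true_yaws = [-0.3, 0.0, 0.4]
frames = [generate(true_w, y) for y in true_yaws]
params, history = invert_jointly(frames)
```

In this toy setting the absolute yaw is ambiguous (rotating the code and all cameras together leaves the output unchanged), so only the yaw differences between frames are recoverable. A real 3D GAN would replace `generate` with a rendering network and the squared error with image-space losses.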

Paper

@inproceedings{Fruehstueck2023VIVE3D,
  title = {{VIVE3D}: Viewpoint-Independent Video Editing using {3D}-Aware {GANs}},
  author = {Fr{\"u}hst{\"u}ck, Anna and Sarafianos, Nikolaos and Xu, Yuanlu and Wonka, Peter and Tung, Tony},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2023}
}

Our code is available on GitHub.

Paper Video

Results

Our method produces natural-looking head compositions when the head angle is changed.

Results with Editing

Our method seamlessly combines traditional latent space editing techniques with the additional capabilities afforded by the 3D GAN.

Age Editing Comparison

We compare our method with related methods on age editing.

Angle Editing Comparison

We compare our method with related methods on head-angle editing.